Terraform is a popular Infrastructure as Code (IaC) tool that is especially useful for modelling and configuring resources on different cloud infrastructures. With Terraform, DevOps teams use human-readable code to automate cloud tasks, deploy applications, and set up and manage cloud resources such as networks, storage, servers and many other services.

Infrastructure as Code advocates that whatever is created or provisioned in an infrastructure should be provisioned through code, so that it can be executed without any manual or human intervention.

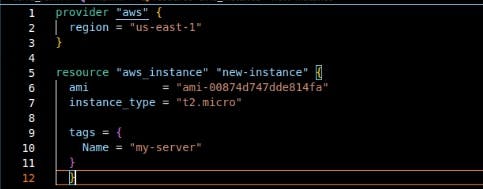

Terraform configurations are written in HCL (HashiCorp Configuration Language). The syntax is easy to write and read, and there is full-scale documentation to help anyone get started with Terraform.

Terraform is an infrastructure automation tool that helps to define and deploy infrastructure, and it supports deployment across multiple cloud environments.

Terraform is designed declaratively: it does not just take step-by-step instructions but lets engineers describe what they want within an infrastructure, and it compares that desired result with what currently exists before the actual deployment.

Components of Terraform Infrastructure provisioning

Providers

Providers are plugins that connect Terraform to the public and private cloud platforms where the infrastructure will be created and resources deployed. The provider plugin for each cloud platform contains the code needed to talk to that platform's APIs, based on the settings in the provider's configuration.

Providers are distributed through the Terraform Registry and are divided into different tiers:

Official providers: these include the major public cloud service providers such as AWS, Azure, GCP, etc. These providers are maintained directly by HashiCorp.

Verified providers: these providers are owned and maintained by third-party organizations through a partnership with HashiCorp. Some of them include DigitalOcean, Heroku, etc. The current Terraform Registry labels this tier Partner.

Community providers: these are providers which are maintained by individual contributors and groups within the Terraform community.
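As a minimal sketch, the providers a configuration depends on can be declared in a required_providers block, where the source address shows who publishes each one. The version constraints below are assumptions chosen for illustration, not recommendations.

```hcl
terraform {
  required_providers {
    # Official tier: published under the hashicorp namespace.
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed constraint, for illustration only
    }

    # Partner (verified) tier: published by the vendor itself.
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0" # assumed constraint, for illustration only
    }
  }
}
```

Running terraform init against a file like this downloads both plugins into the working directory.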

Region

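A region is a geographic area in which a cloud provider operates data centres, and each region is usually made up of several availability zones. With the AWS provider, the region is set in the provider configuration, or picked up from the environment or the shared AWS configuration files.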
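As a minimal sketch, the region can be pinned explicitly in the provider block. The region name used here is an assumption for illustration; any valid AWS region name works the same way.

```hcl
# Configure the AWS provider and choose where resources will be created.
provider "aws" {
  region = "eu-west-2" # assumed region, replace with the one nearest your users
}
```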

Resource

A resource is any infrastructure object that can be created, changed and managed in Terraform. The available resources differ from platform to platform.

It is easy to create infrastructure resources with Terraform because it uses the same syntax across all the providers. The resource types and their arguments are specific to each provider, but the way a block is written for AWS carries over to DigitalOcean, GCP, Azure, etc.

When provisioning a resource, the first values to specify are the resource type, which begins with the name of the provider, and a local name for the resource. These form the header of the resource block, and the other configuration options follow inside it as key-value pairs.
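A minimal sketch of a resource block for the AWS provider is shown here. The resource type aws_instance carries the provider name as its prefix, web is a hypothetical local name, and the AMI ID is a placeholder that must be replaced with a real image ID for the chosen region.

```hcl
# resource "<provider>_<type>" "<local name>" { ...configuration options... }
resource "aws_instance" "web" {
  ami           = "ami-0123456789abcdef0" # placeholder, not a real image ID
  instance_type = "t2.micro"

  tags = {
    Name = "example-web" # hypothetical tag value
  }
}
```

Other parts of the configuration can then refer to this server as aws_instance.web.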

Terraform Workflow

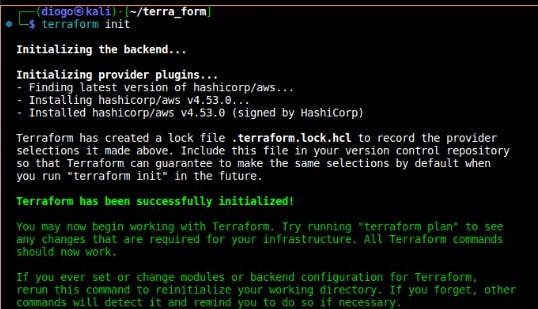

- Initialization: much like running git init in a new repository, the first step in Terraform is to initialize the working directory. This reads the code files in the directory, downloads the provider plugins and modules they reference so that Terraform can interact with the necessary APIs, and sets up the backend where the Terraform state files will be stored.

Initialize the working directory using $ terraform init

This initialization installs every provider specified in the code. You can confirm that the working directory has been initialised by the presence of the hidden .terraform directory and the .terraform.lock.hcl file, which hold the Terraform setup for the working directory.

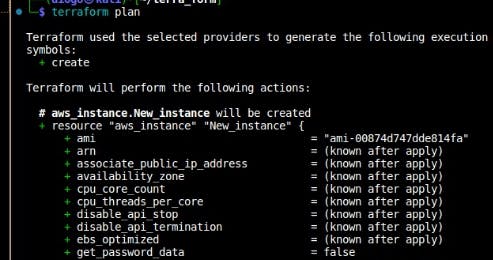

- Terraform plan: after a directory has been initialised, terraform plan is the next command to run. It does a dry run of the code files, comparing them with the recorded state and querying the provider’s API, and lists the resources that will be created, changed or deleted. The purpose is to make users aware of what they are about to do so that they do not accidentally provision, modify or delete resources. Run it using $ terraform plan

In the output, a plus (+) sign marks everything that will be created, a minus (-) sign marks everything that will be deleted, and a tilde (~) marks resources that will be updated in place.

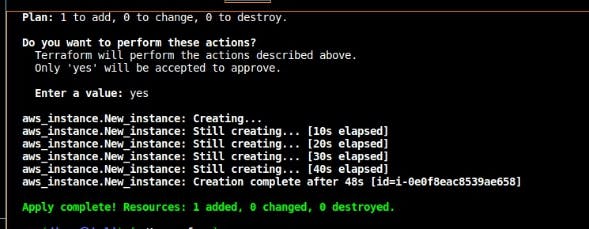

- Terraform apply: this command builds the plan, asks for confirmation, and then creates or changes the resources on the specified cloud service provider. The -auto-approve flag skips the confirmation prompt: $ terraform apply -auto-approve

- Terraform destroy: this command deletes the resources provisioned on the infrastructure. It shows all the changes that are going to be made and asks for confirmation before destroying anything. It destroys every resource managed by the configuration but does not delete the code itself.

Terraform Architecture and Filesystem

Terraform creates several files and directories in the working directory without user intervention, some during initialization and others when the configuration is first applied.

.terraform - this is the hidden directory that holds the installed provider plugins and modules required by the code.

Without that directory in the working directory, Terraform cannot make any API request to the provider and the resources cannot be deployed on the infrastructure.

Lockfile: the Terraform lockfile (.terraform.lock.hcl) records the exact provider versions that were selected during initialization, together with their checksums. Committing it to version control means every run of terraform init installs the same provider versions, which keeps deployments reproducible. It does not download or bundle modules.

terraform.tfstate - this file holds the Terraform state, stored in JSON format. It is written when the configuration is first applied, and through it Terraform keeps track of everything it has created.

The state is what lets Terraform map the resources declared in the configuration to the real objects on the provider and work out what has to change on each run.

It contains information about every resource and data object managed through Terraform. In the statefile, there are blocks which correspond to resources and also blocks which correspond to data sources.
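The two kinds of entries can be illustrated with a minimal sketch that uses one data source and one managed resource. The local names available and scratch, and the volume size, are assumptions made for this example. After an apply, the statefile would hold an entry for each block.

```hcl
# Data source: read-only lookup of the availability zones in the current region.
data "aws_availability_zones" "available" {
  state = "available"
}

# Managed resource: a small volume that Terraform creates, updates and deletes.
resource "aws_ebs_volume" "scratch" {
  availability_zone = data.aws_availability_zones.available.names[0]
  size              = 1 # size in GiB, assumed value
}
```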

The statefile can contain secrets and other sensitive information, such as credentials used for authentication and authorization. This is why it is important to encrypt it and never commit it to a public repository.

Storing the Terraform Statefile

The statefile can be stored both locally and remotely within cloud platforms.

- Local storage:

Here the statefile is kept on a single machine, such as the laptop where the Terraform code is written. This makes the statefile easy to access but risky, because a statefile in a local working directory is stored in plain text and can be read by anyone who gains access to the machine.

- Remote storage:

Here the statefile is stored on remote services such as Terraform Cloud, an Amazon S3 bucket or Google Cloud Storage. These backends can be secured with access permissions and authentication.

Depending on the backend, statefiles stored remotely can be encrypted and backed up for easy retrieval. A shared remote backend also supports teamwork and remote collaboration, and it integrates easily with CI/CD pipelines and other environments.

The main disadvantage is that a remote backend can be more complex to set up and may cost more.
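To give a sense of the setup involved, here is a minimal sketch of a remote backend that keeps the statefile in an Amazon S3 bucket. The bucket name, key path and region are hypothetical, and the bucket is assumed to exist already with suitable access permissions.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state" # hypothetical bucket name
    key     = "prod/terraform.tfstate"  # hypothetical path inside the bucket
    region  = "eu-west-2"               # assumed region
    encrypt = true                      # request server-side encryption of the statefile
  }
}
```

After adding or changing a backend block, terraform init has to be run again so that Terraform can configure the new backend.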